Melody - An Expressive Virtual Marimba

At my very first VR hackathon, Evie Powell, Ph.D., of Verge of Brilliance, LLC, was developing a marimba. We worked on separate projects at the event, but I was fortunate to join this project later. I'm not a huge fan of skeuomorphic interfaces, but a virtual version of any instrument too big to lug around is a great idea.

Evie's FMOD implementation in Marimba was having some latency issues. Earlier that summer I had the pleasure of working with PureData and Unity in Rob Hamilton's Audio Games workshop at CCRMA, so I suggested we try PureData.

After a proof of concept, Evie integrated my patches into Unity with LibPD. I hacked the patches together with guidance from "The Theory and Technique of Electronic Music", the book by PureData creator Miller Puckette. Together we built a polyphonic sampler for the audio engine, featuring velocity sensitivity and hard and soft mallet articulations.

How I Made the Sampler

LibPD is an embeddable library version of PureData, which lets it run inside Unity3D. PureData is a visual programming environment for music and signal processing, and a fully featured programming language in its own right.

PureData "patches" are programs and routines that can be loaded via LibPD and communicate directly with Unity3D. Below is the main patch, which receives notes from the user interface in Unity (look for the [r note] object). This patch begins by parsing out the note information and distributing it to a polyphony system that allows multiple notes (including repeats of the same note) to sound simultaneously, across up to 16 voices.

Once the note message from the interface has been parsed and assigned a voice, it is repacked and sent to that voice.

Inside [pd sampvoices] there are sixteen instances of the [sampvoice7] abstraction, each representing a voice that can sound at the same time as the others.
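For readers who don't use PureData, here is a rough Python sketch of the kind of voice allocation described above. It is not a transcription of the patch or of the [poly] object's internals: the `VoiceAllocator` class, its method names, and the "steal the oldest voice when all sixteen are busy" policy are my own assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

NUM_VOICES = 16  # the patch uses sixteen [sampvoice7] instances


@dataclass
class Voice:
    pitch: Optional[int] = None  # None means the voice is free
    serial: int = 0              # when the voice was last touched


class VoiceAllocator:
    """Hypothetical allocator: hands each incoming note to one voice."""

    def __init__(self, num_voices: int = NUM_VOICES) -> None:
        self.voices = [Voice() for _ in range(num_voices)]
        self.counter = 0

    def note_on(self, pitch: int, velocity: int) -> Tuple[int, int, int]:
        """Return (voice number, pitch, velocity); voice numbers start at 1."""
        self.counter += 1
        free = [i for i, v in enumerate(self.voices) if v.pitch is None]
        # Assumption: prefer a free voice, otherwise steal the oldest one.
        candidates = free if free else list(range(len(self.voices)))
        idx = min(candidates, key=lambda i: self.voices[i].serial)
        self.voices[idx] = Voice(pitch, self.counter)
        return (idx + 1, pitch, velocity)

    def note_off(self, pitch: int) -> Optional[Tuple[int, int, int]]:
        """Free the oldest voice holding this pitch, if there is one."""
        held = [i for i, v in enumerate(self.voices) if v.pitch == pitch]
        if not held:
            return None
        idx = min(held, key=lambda i: self.voices[i].serial)
        self.counter += 1
        self.voices[idx] = Voice(None, self.counter)
        return (idx + 1, pitch, 0)


if __name__ == "__main__":
    alloc = VoiceAllocator()
    # The same note struck twice lands on two different voices.
    print(alloc.note_on(60, 100))
    print(alloc.note_on(60, 70))
```

The repacked (voice number, pitch, velocity) triple plays the role of the message that gets routed to an individual voice. Because every strike gets its own voice, repeated hits on the same bar can ring over each other instead of cutting each other off.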

Below is the [sampvoice7] abstraction, which contains the sound playback portion of the voice. Upon receiving the note trigger from [poly], the voice does some math to transpose the pitch of the sample, since the same buffered sound is used to cover 3 semitones of the range. A modulo operation determines the transposition of each note, and the playback speed is adjusted accordingly.

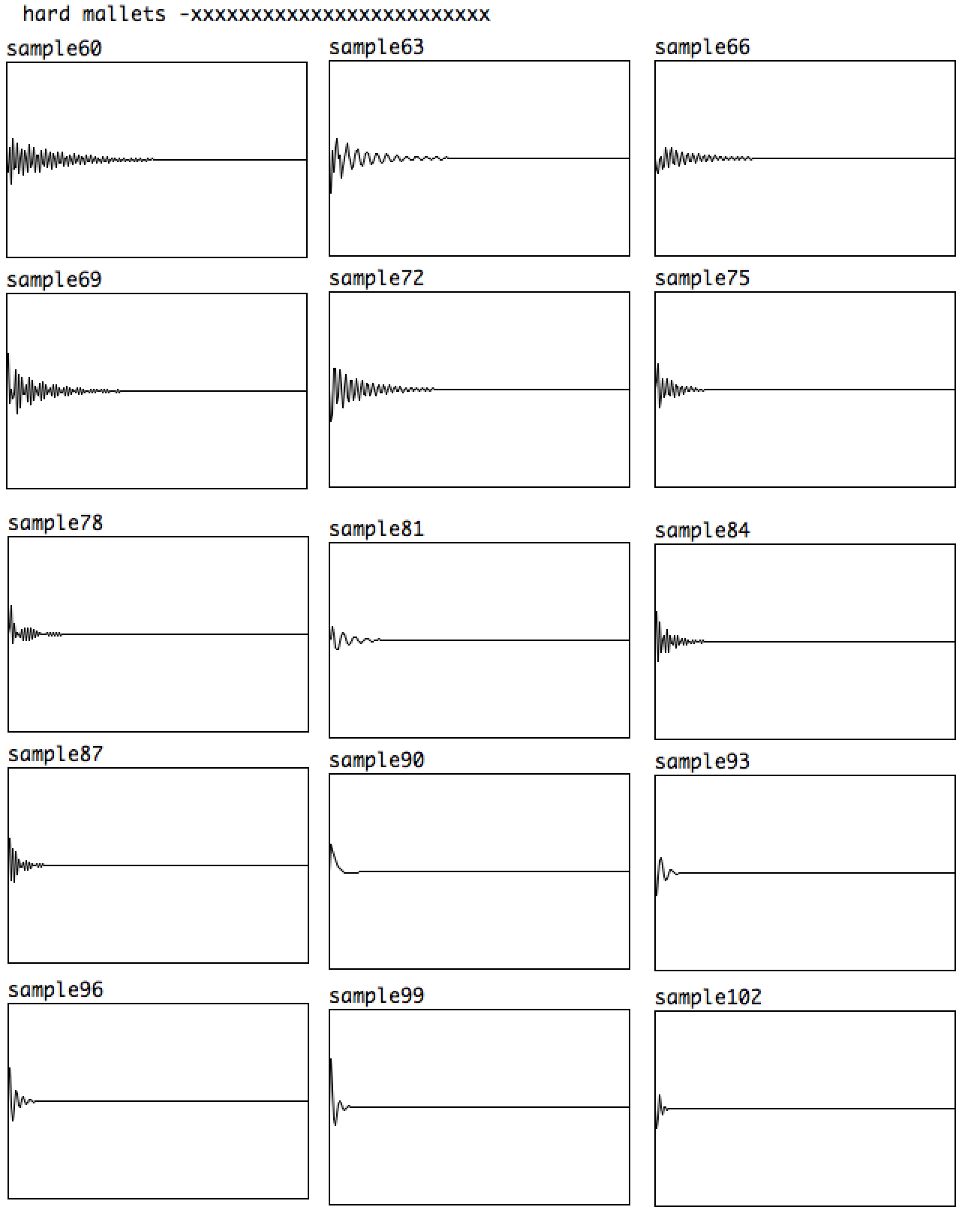
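The transposition math can be sketched in a few lines of Python. This is an illustration under assumptions, not the patch itself: I am assuming each sample is recorded at the lowest of its three semitones and sped up for the other two, and the names `sample_and_rate`, `lowest_note`, and `span` are made up for this example.

```python
import math


def sample_and_rate(midi_note: int, lowest_note: int = 0, span: int = 3):
    """Pick a sample buffer and a playback-speed ratio for a note.

    Assumes one buffer per `span` semitones, recorded at the lowest
    pitch of its group (an assumption, not confirmed by the patch).
    """
    steps = midi_note - lowest_note
    sample_index = steps // span   # which buffer to play
    offset = steps % span          # semitones above the recorded pitch
    # Each semitone up multiplies playback speed by 2^(1/12).
    rate = 2.0 ** (offset / 12.0)
    return sample_index, rate


if __name__ == "__main__":
    for note in (60, 61, 62, 63):
        index, rate = sample_and_rate(note)
        print(note, index, round(rate, 4))
```

With these assumptions, notes 60, 61, and 62 share one buffer and play at roughly 1.0, 1.0595, and 1.1225 times normal speed, while note 63 moves on to the next buffer at normal speed. Keeping each sample within a couple of semitones of its recorded pitch limits how much the timbre shifts when it is sped up.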

Pictured below is what some of the buffers look like:

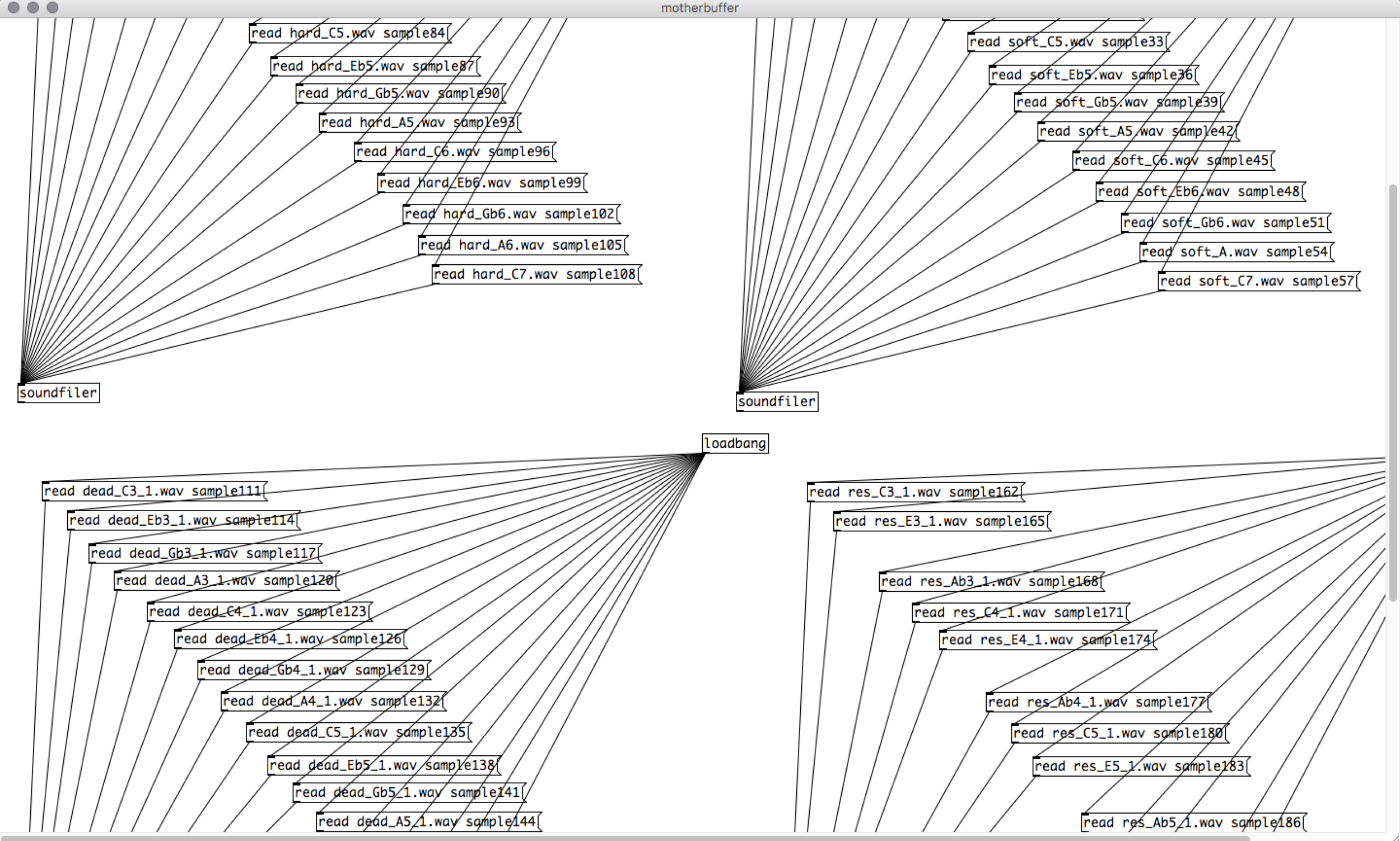

All buffers must be initialized upon loading the project:

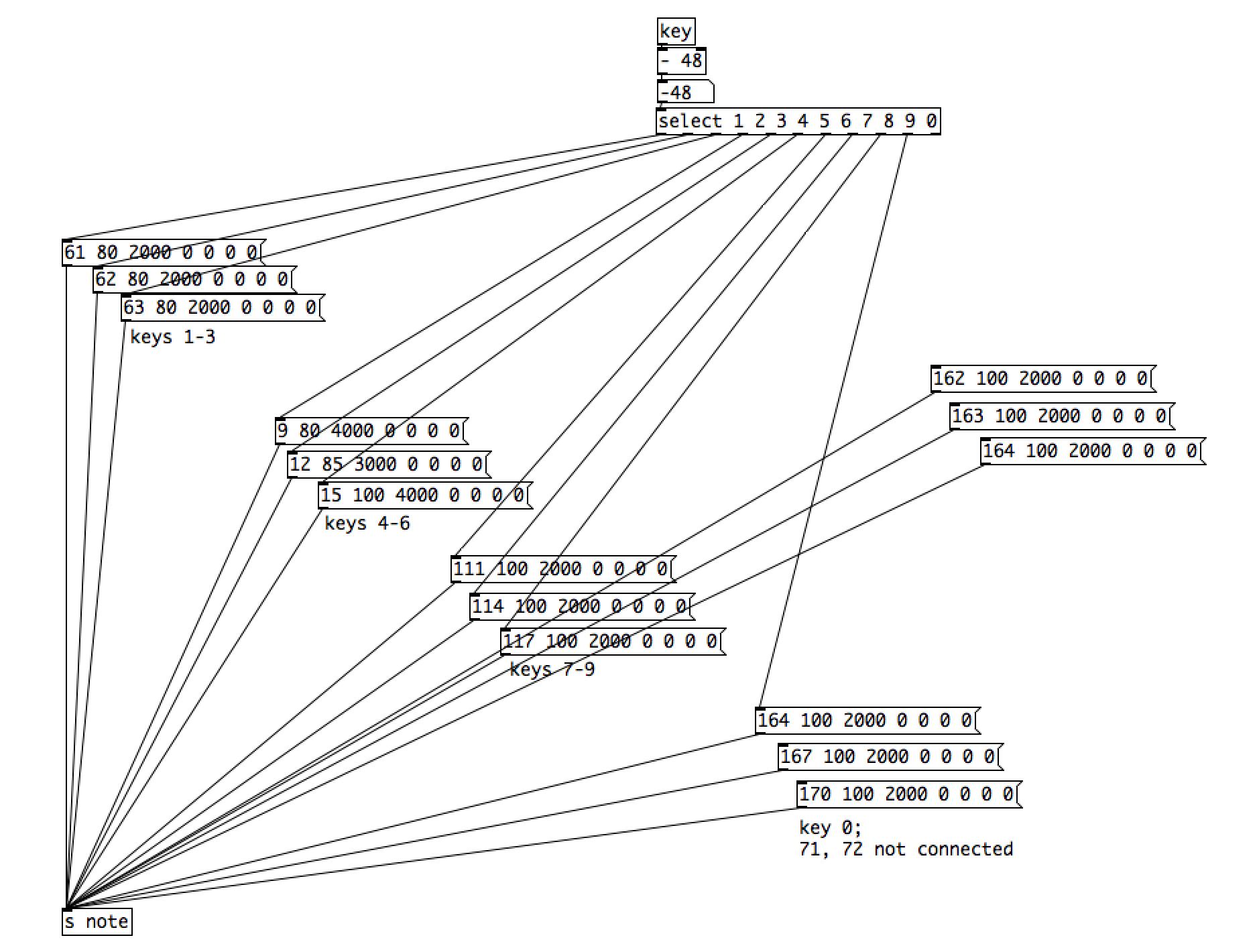

Keypresses were mapped to the various articulations so the patch could be unit tested before connecting it to Unity3D.

Outcome

We were able to build an expressive musical instrument for VR and ship it on October 5th, 2017! Evie wrote a MIDI recording interface in a couple of days (because she codes like a boss) and fleshed out some really awesome interactions for playing back your marimba riffs and overdubbing as you please. Currently, you can practice marimba in multiple environments, and there's more to come!

To play Melody and see more videos, check it out on Steam here: http://store.steampowered.com/app/471200/Melody/